The robot buggy was tested at Rix Inclusive Research to see how well it worked in principle. The controller (designed by Levi) communicated well with the robot, and commands were successfully sent to the buggy itself via Bluetooth, but the buggy was reluctant to move in a straight line.

No two DC motors perform quite the same, and one was clearly stronger than the other, so the buggy turned right when it should have moved straight.

To counter the effect of the mismatched motors, a pair of trimmers (10K pots) was added to apply a small amount of ‘trim’ to each motor, speeding it up or slowing it down as required. Although this was partially successful, it was quite difficult to get consistent straight-line performance.

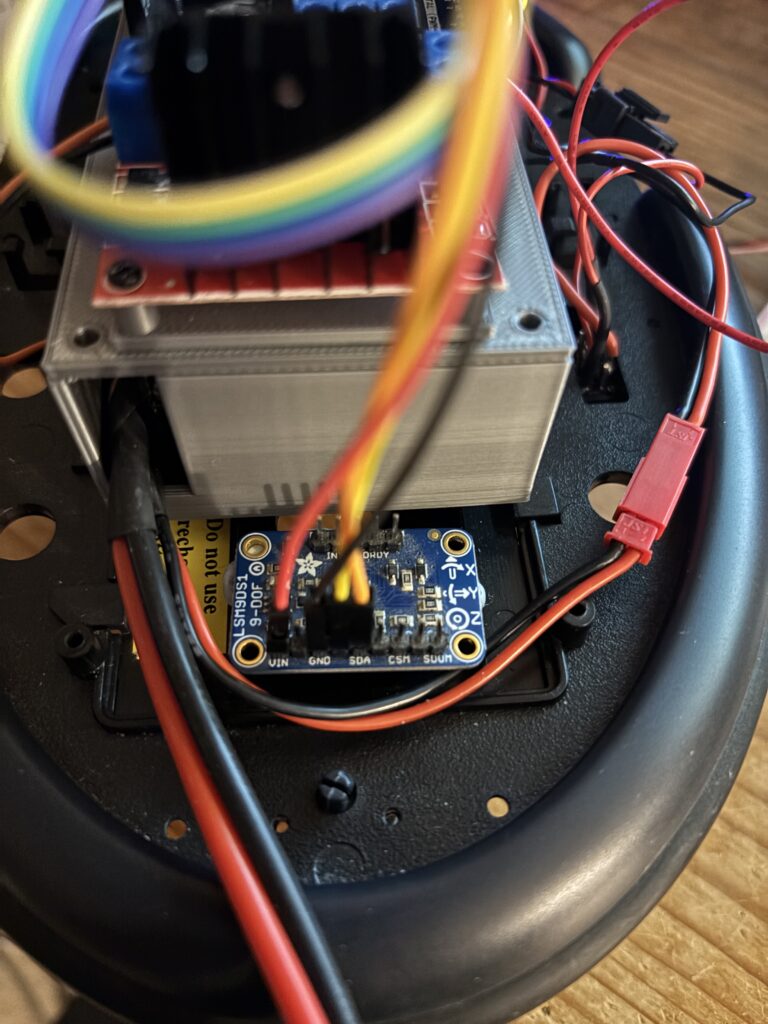
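As a rough illustration of the trim idea, the sketch below maps a trimmer reading onto a small speed offset for one motor. It is written in Python for readability and is not the buggy's actual firmware. The 10-bit ADC range, the 8-bit PWM range, the `max_trim` value and the function name `trimmed_pwm` are all assumptions.

```python
def trimmed_pwm(base_pwm, pot_reading, max_trim=30, adc_max=1023, pwm_max=255):
    """Return the PWM value for one motor after applying its trimmer.

    Assumptions (not from the original build): the pot is read by a
    10-bit ADC (0..1023) and the motor driver takes 8-bit PWM (0..255).
    """
    centre = adc_max / 2                      # pot centred = no trim
    # Scale the pot position to the range -max_trim .. +max_trim
    trim = (pot_reading - centre) / centre * max_trim
    pwm = round(base_pwm + trim)
    # Keep the result inside the valid PWM range
    return max(0, min(pwm_max, pwm))


# Example: nudge the weaker motor up and the stronger one down
left_pwm = trimmed_pwm(200, 1023)   # pot fully clockwise
right_pwm = trimmed_pwm(200, 0)     # pot fully anticlockwise
```

Because the trim is a fixed offset, it cannot follow changes in battery voltage, load or floor surface, which may be one reason consistent results were hard to get.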

To try and persuade the buggy to move in a straight line, an LSM9DS1 accelerometer/gyro module was added to constrain the movement. Yaw is calculated from its readings and any movement away from a straight line is corrected. This is looking promising, although some final tuning is required.

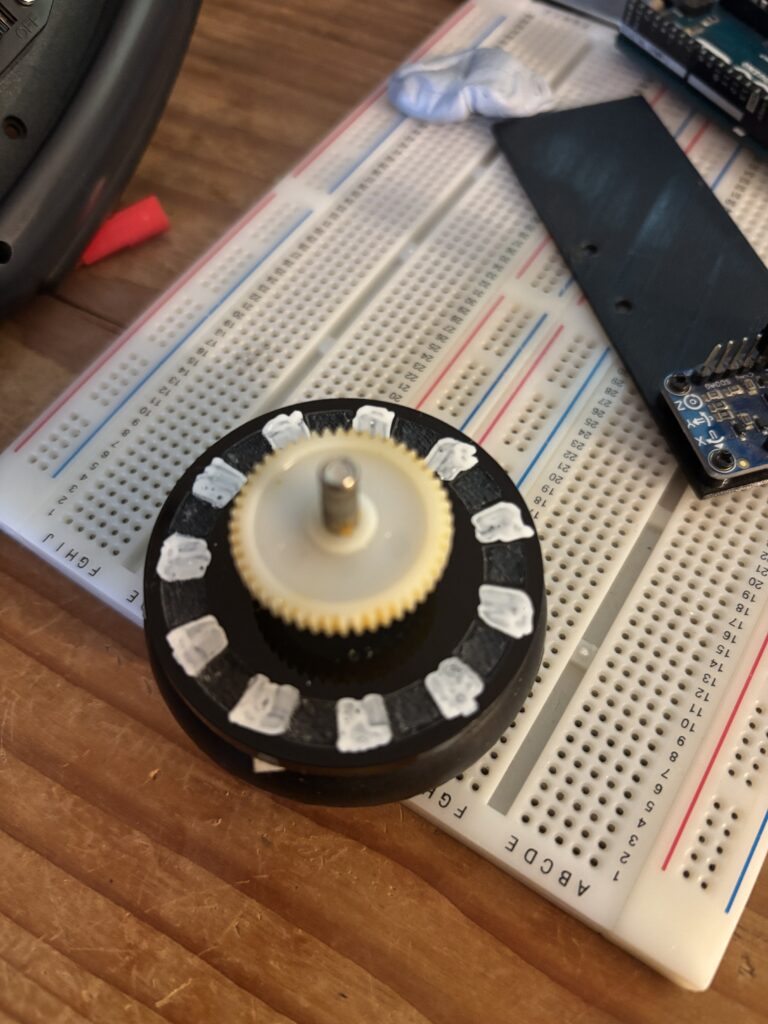
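One common way to do this kind of correction is to integrate the gyro's z-axis rate into a yaw angle and feed the error into a simple proportional controller. The sketch below shows that idea in Python. It is not the code running on the buggy, and the gain `kp`, the speed range, the sign convention and the function names are all assumptions.

```python
def integrate_yaw(yaw_deg, gyro_z_dps, dt_s):
    """Update the yaw estimate from the gyro z-axis rate (degrees/second)."""
    return yaw_deg + gyro_z_dps * dt_s


def heading_hold(base_speed, yaw_error_deg, kp=2.0, max_speed=255):
    """Return (left, right) motor speeds that steer back towards zero error.

    Assumed sign convention: a positive yaw error means the buggy has
    drifted to the right, so the left wheel is slowed and the right
    wheel is sped up to turn it back to the left.
    """
    correction = kp * yaw_error_deg
    left = round(base_speed - correction)
    right = round(base_speed + correction)
    # Clamp both speeds to the range the motor driver accepts
    left = max(0, min(max_speed, left))
    right = max(0, min(max_speed, right))
    return left, right


# Example: after drifting 5 degrees to the right
left_speed, right_speed = heading_hold(150, 5.0)
```

The "final tuning" in a scheme like this is mostly a matter of choosing `kp`: too low and the buggy still drifts, too high and it weaves from side to side. Gyro drift also accumulates in the integrated yaw over longer runs.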

Another upgrade in the pipeline is the addition of wheel encoders. Due to the space limitations of the existing wheels and motors, the encoders needed to be reflective. To enable this, two disks of acrylic were cut and engraved so that light from an IR LED would reflect from the shiny surface (painted with a white marker) and not reflect where the acrylic was engraved (in between the white marks):

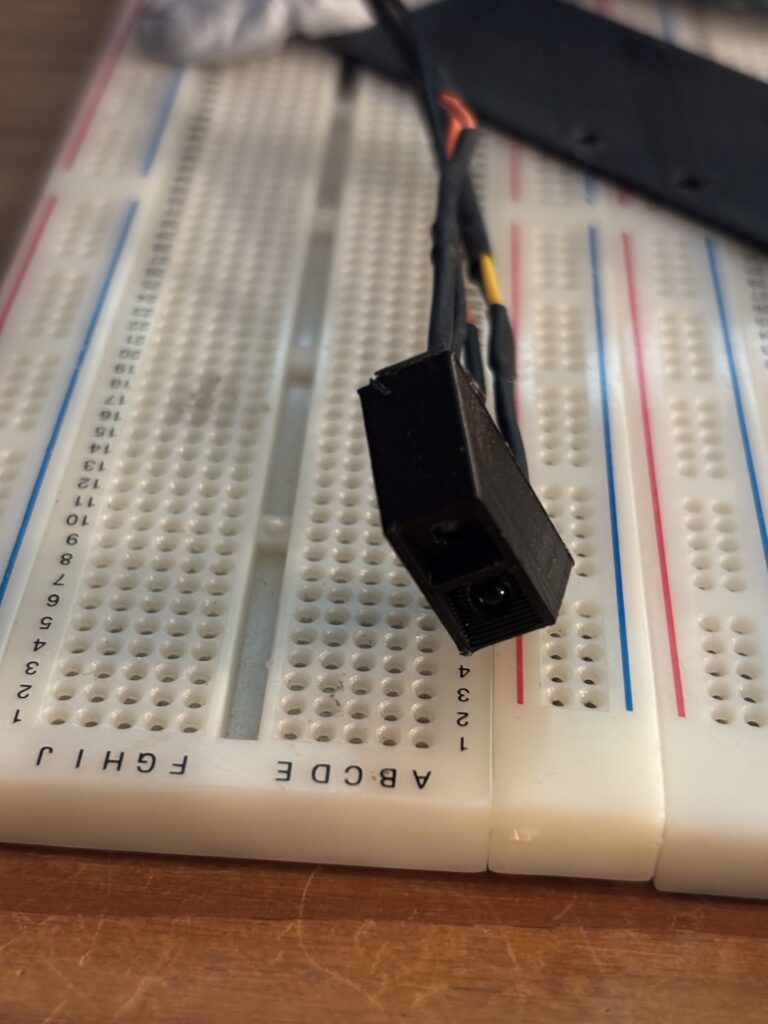

The encoders themselves were 3D printed from PLA, and each contains an IR LED and a phototransistor set at 45 degrees.

These have yet to be tested, but the idea is to get better control of the forward motion. The buggy needs to move a fixed distance each time a forward command is encountered. Currently, the forward movement is controlled using a simple timer. It’s not very accurate!
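To show how encoder counts could replace the timer, here is a minimal Python sketch that converts a target distance into a number of encoder ticks and drives until that count is reached. The wheel diameter, the number of white marks per disk, and the `read_ticks` and `set_motors` callables are hypothetical placeholders, not details of the actual buggy.

```python
import math


def ticks_for_distance(distance_mm, wheel_diameter_mm, marks_per_rev):
    """Number of encoder ticks needed to travel distance_mm."""
    circumference = math.pi * wheel_diameter_mm
    mm_per_tick = circumference / marks_per_rev
    return round(distance_mm / mm_per_tick)


def drive_fixed_distance(target_ticks, read_ticks, set_motors, speed=150):
    """Drive forward until the encoder has counted target_ticks.

    read_ticks() and set_motors(left, right) are hypothetical hooks
    standing in for the real encoder counter and motor driver.
    """
    start = read_ticks()
    set_motors(speed, speed)
    while read_ticks() - start < target_ticks:
        pass                      # keep driving until enough ticks are seen
    set_motors(0, 0)              # stop once the distance is covered


# Example with assumed values: 65 mm wheels, 12 white marks per disk
target = ticks_for_distance(100, 65, 12)
```

With only a handful of marks per revolution the distance resolution is coarse (about 17 mm per tick for the assumed values), so the number of marks on the disk sets how precisely each forward step can be repeated.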
